Best Appointment Scheduling App in 2024

Discover the ultimate efficiency with our top pick for the best appointment scheduling app in 2024. Streamline your cale...

Enhancing Student Experiences: 3 Ways Higher Education Embraces Scheduling Automation

Revolutionize higher education with scheduling automation. Discover how colleges enhance student experiences through adm...

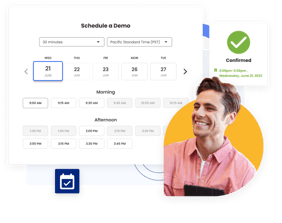

Top Meeting Automation Platforms of 2024: Streamline Your Workflow and Boost Productivity

Uncover the best meeting automation platforms in 2024. Streamline scheduling, enhance productivity with top tools, inclu...

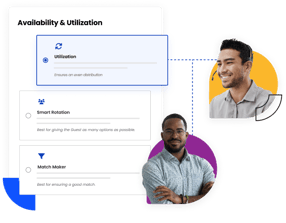

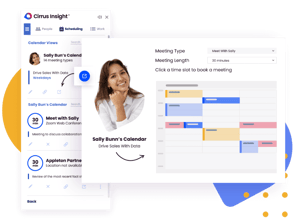

Cirrus Insight Launches Intelligent Customer Scheduling

Cirrus Insight is proud to announce the launch of its latest scheduling innovation, Smart Scheduler.

How to Convert Salesforce Leads in Different Stages

Discover effective strategies for Salesforce lead conversion. Learn to streamline stages and enhance results with Cirrus...

Elevating Your Sales Forecasting Game: Techniques and Tools for Better Accuracy

Learn key sales forecasting methods and tools to enhance your business strategy and decision-making.

10 Actionable Admin Takeaways from Dreamforce 2023

Discover actionable admin insights and strategies from Dreamforce 2023 to optimize your business operations. Don't miss ...

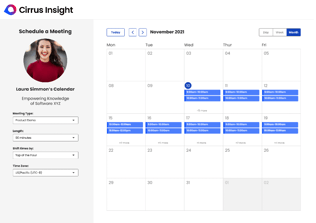

Guide to Team Calendar Scheduling

Maximize productivity & revenue with our ultimate team calendar scheduling guide. Unlock the power of seamless organizat...

The Benefits of Using Team & Calendar Scheduling Tools in Sales

Unlock productivity, boost revenue growth, and streamline collaboration with Team & Calendar scheduling tools. Experienc...

How to Create the Perfect Email to Set Up a Meeting

Discover the art of crafting the perfect email to set up a meeting. Master effective strategies from Cirrus Insight for s...